Shortest Masterclass on Understanding AI: A Journey from Comparing Cars to Understanding Neural Networks

If I ask you, “Is the Maruti Swift more similar to the Hyundai i20 or to the BMW X7?”

The answer is simple. But how do you, and your brain, arrive at it?

You mentally compare the features of the Swift (our query) and the i20, and you derive a similarity score.

Then you compare the features of the Swift and the X7 and arrive at another similarity score.

These features could be Price, Engine CC, Brand Value, etc.

Now the i20 would get a very high similarity score (say 0.88) and the X7 a lower one (say 0.56), right?

Answer: “The Swift is similar to the i20.”
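To make that mental comparison concrete, here is a toy sketch in Python. Everything in it is an assumption for illustration. The feature values are rough, hypothetical numbers (not official specs), and the per-feature maximums used for scaling are made up. The score (1 minus the average per-feature gap) is just one simple stand-in for "derive a similarity score". It is not what your brain does, and it is not a standard ML metric.

```python
# Toy version of the "mental comparison". All numbers are rough, hypothetical
# values chosen for illustration, not official specs.
# Dimensions: price (lakh INR), engine displacement (cc), brand value (0-10).
CARS = {
    "Swift": [7.0, 1197.0, 6.0],
    "i20": [8.0, 1197.0, 6.5],
    "X7": [125.0, 2998.0, 9.5],
}
# Assumed upper bound per dimension, used to put every feature on a 0-1 scale
# so that price in lakhs and engine size in cc become comparable.
MAX_VALUES = [200.0, 4000.0, 10.0]


def scale(features: list[float]) -> list[float]:
    """Divide each feature by its assumed maximum."""
    return [value / maximum for value, maximum in zip(features, MAX_VALUES)]


def feature_similarity(a: list[float], b: list[float]) -> float:
    """1 minus the average per-feature gap: 1.0 means identical features."""
    scaled_a, scaled_b = scale(a), scale(b)
    gaps = [abs(x - y) for x, y in zip(scaled_a, scaled_b)]
    return 1.0 - sum(gaps) / len(gaps)


query = "Swift"
scores = {
    name: round(feature_similarity(CARS[query], features), 2)
    for name, features in CARS.items()
    if name != query
}
print(scores)  # {'i20': 0.98, 'X7': 0.54}
print("Most similar to", query, "->", max(scores, key=scores.get))  # i20
```

The scaling step matters: without it, engine size (numbers in the thousands) would drown out brand value (a number from 0 to 10).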

Congratulations! You have intuitively understood some fundamental concepts of ML/AI:

- Dimension: a unique feature or attribute, like Price, Engine CC, etc. Each feature adds one dimension to how we describe a car.

- Parameter: An algorithm understands only numbers. Period. So text is first converted into numbers and then passed through the many layers of a deep learning (DL) model like GPT. During training, the model learns features and patterns from a huge corpus of text by repeatedly adjusting the weights and biases in its equations (using algorithms such as gradient descent). These learned weights and biases are called parameters. In our car example, your brain's own neural network did something loosely similar, and far more complex.

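GPT-3 has 175 billion parameters.

GPT-4 reportedly has a freaking 1.7 trillion parameters (yes, trillion with a T), though OpenAI has not officially disclosed the number. Parameter counts this large are a big part of why GPT models are so GPU hungry all the time :-P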
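Why so GPU hungry? A quick back-of-the-envelope sketch: storing the weights alone takes roughly (number of parameters) × (bytes per parameter). The assumptions here are 2 bytes per parameter (16-bit floats) and 80 GB of memory per GPU. The GPT-4 figure is the 1.7 trillion number mentioned above, which OpenAI has not confirmed. Activations, caches, and training state would add a lot more on top, so treat these as lower bounds.

```python
import math

# Weights-only memory estimate. Assumption: 2 bytes per parameter (fp16/bf16).
# Ignores activations, KV caches, optimizer state, and other runtime overhead.
BYTES_PER_PARAM = 2   # assumed 16-bit precision
GPU_MEMORY_GB = 80    # assumed per-GPU memory, e.g. an 80 GB data-center GPU


def weight_memory_gb(num_params: int, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory needed just to store the weights, in GB (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 10**9


def min_gpus(num_params: int) -> int:
    """Fewest GPUs whose combined memory could hold the weights alone."""
    return math.ceil(weight_memory_gb(num_params) / GPU_MEMORY_GB)


models = {
    "GPT-3 (175B)": 175_000_000_000,
    "GPT-4 (reported ~1.7T, unconfirmed)": 1_700_000_000_000,
}
for name, params in models.items():
    print(f"{name}: {weight_memory_gb(params):,.0f} GB of weights, "
          f"at least {min_gpus(params)} GPUs")
# GPT-3 (175B): 350 GB of weights, at least 5 GPUs
# GPT-4 (reported ~1.7T, unconfirmed): 3,400 GB of weights, at least 43 GPUs
```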

- Embedding: A deep learning model uses its learned parameters to convert simple words into a long array of floating-point numbers. Think of this array as coordinates in a multi-dimensional space. Using these coordinates (formally called a vector), you can place, or embed, the text in that space. That's what an embedding is. So we have converted text into numbers. What do we do with these embeddings now? Some geometry and trigonometry!

- Cosine Similarity: We measure the similarity between two embeddings (coordinates) by drawing a line from the origin to each coordinate (side note: this line is the vector). Then we find the angle between the two lines and take its cosine. Why cosine? Because cos(0°) is 1, and an angle of 0° means the two lines point in exactly the same direction. As the angle grows, the cosine shrinks (down to -1 at 180°). So similar texts have a smaller angle, and therefore a higher cosine similarity, between their embeddings. In the car example, the Swift's embedding is nearer to the i20's embedding, far from the X7's, and even farther from a chair's!
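Here is a minimal Python sketch of cosine similarity. In practice nobody measures the angle directly: the cosine comes straight from the dot product, cos(θ) = (a · b) / (|a| |b|). The 3-dimensional vectors below are hypothetical, hand-picked stand-ins for embeddings (real embedding models output hundreds or thousands of numbers per text). The angle is computed only to connect back to the explanation.

```python
import math

# Hypothetical 3-dimensional "embeddings", hand-picked for illustration.
# A real embedding model would produce much longer vectors from text.
EMBEDDINGS = {
    "Swift": [0.9, 0.3, 0.1],
    "i20": [0.8, 0.4, 0.1],
    "X7": [0.3, 0.9, 0.3],
    "chair": [0.0, 0.1, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """cos(theta) = (a . b) / (|a| * |b|); ranges from -1 to 1."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def angle_degrees(a: list[float], b: list[float]) -> float:
    """Angle between the two lines drawn from the origin, in degrees."""
    # Clamp to [-1, 1] so float rounding never pushes acos out of its domain.
    cos_theta = max(-1.0, min(1.0, cosine_similarity(a, b)))
    return math.degrees(math.acos(cos_theta))


query = EMBEDDINGS["Swift"]
for name in ("i20", "X7", "chair"):  # i20 scores highest, chair lowest
    other = EMBEDDINGS[name]
    print(f"Swift vs {name}: cosine = {cosine_similarity(query, other):.2f}, "
          f"angle = {angle_degrees(query, other):.1f} degrees")

# Same direction, different length: angle 0, so cosine is 1 (up to float rounding).
print(round(cosine_similarity(query, [2 * x for x in query]), 6))
```

Note that cosine similarity ignores vector length: doubling a vector does not change its direction, so its similarity to the original stays 1.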

Congratulations! Now you understand the core idea behind how services like Netflix can recommend shows (now, when will they release Squid Game S2? 🤯), and how apps built on LLMs (like GPT and Bard) use this Swiss Army knife under the hood to find relevant text.
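Here is a simplified sketch of that "recommend similar items" idea: represent each show as a vector, then rank the other shows by cosine similarity to the one you liked. The show vectors (and what their dimensions mean) are made up for illustration, and this is not Netflix's actual recommendation system. The same top-k ranking step, run over embeddings of text chunks, is the similarity search many LLM apps use to fetch relevant documents.

```python
import math

# Made-up show vectors for illustration only (not real data, not Netflix's system).
# Read the dimensions loosely as [thriller, comedy, deadly-games].
SHOWS = {
    "Squid Game": [0.9, 0.1, 0.95],
    "Alice in Borderland": [0.85, 0.05, 0.9],
    "The Office": [0.05, 0.95, 0.0],
    "Money Heist": [0.9, 0.1, 0.3],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """cos(theta) = (a . b) / (|a| * |b|)"""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def recommend(liked: str, k: int = 2) -> list[str]:
    """Return the k other shows whose vectors point most nearly the same way."""
    query = SHOWS[liked]
    scored = [
        (name, cosine_similarity(query, vector))
        for name, vector in SHOWS.items()
        if name != liked
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # most similar first
    return [name for name, _ in scored[:k]]


print(recommend("Squid Game"))  # ['Alice in Borderland', 'Money Heist']
```

Scanning every item is fine for four shows; systems with millions of items typically switch to approximate nearest-neighbor indexes to keep this lookup fast.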

What’s next?

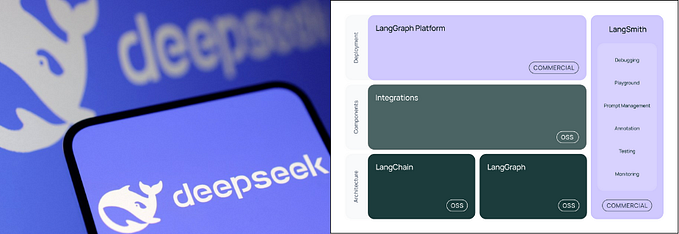

(Hint: implement all of the above in just 3 lines of code using LangChain!)

(Fun fact: I was shocked when I saw the results of these lines.)

Previous Post: How to Create your own ChatGPT in 2 lines?

Follow me on this adventure of #AI, #Langchain, and #LLM.